

PyTorch is an open source machine learning framework used for both research prototyping and production deployment. According to its source code repository, PyTorch provides two high-level features:

- Tensor computation (like NumPy) with strong GPU acceleration.

- Deep neural networks built on a tape-based autograd system.
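As a minimal sketch of both features, assuming a recent PyTorch install (the tensor values and variable names are illustrative only), the snippet below does NumPy-style tensor math on a GPU when one is present, then lets autograd record a computation and replay it backward to get a gradient:

```python
import torch

# Pick a GPU if one is available; otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

# Tensor computation: NumPy-like creation and arithmetic, placed on `device`.
a = torch.arange(6, dtype=torch.float32, device=device).reshape(2, 3)
b = torch.ones(3, 2, device=device)
product = a @ b  # matrix multiply, result has shape (2, 2)

# Autograd: operations on tensors with requires_grad=True are recorded,
# and backward() walks that record in reverse to compute gradients.
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
y = (x ** 2).sum()  # y = x0^2 + x1^2 + x2^2
y.backward()        # fills x.grad with dy/dx = 2 * x

print(product.cpu())
print(x.grad)
```

The same code runs unchanged on CPU or GPU; only the `device` string differs.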

Originally developed at Idiap Research Institute, NYU, NEC Laboratories America, Facebook, and Deepmind Technologies, with input from the Torch and Caffe2 projects, PyTorch now has a thriving open source community. PyTorch 1.10, released in October 2021, has commits from 426 contributors, and the repository currently has 54,000 stars.

This article is an overview of PyTorch, including new features in PyTorch 1.10 and a brief guide to getting started with PyTorch. I’ve previously reviewed PyTorch 1.0.1 and compared TensorFlow and PyTorch. I suggest reading the review for an in-depth discussion of PyTorch’s architecture and how the library works.

The evolution of PyTorch

Early on, academics and researchers were drawn to PyTorch because it was easier to use than TensorFlow for model development with graphics processing units (GPUs). PyTorch defaults to eager execution mode, meaning that its API calls execute when invoked, rather than being added to a graph to be run later. TensorFlow has since improved its support for eager execution mode, but PyTorch is still popular in the academic and research communities.
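To make "execute when invoked" concrete, here is a small sketch (the values are arbitrary): each operation returns a concrete tensor immediately, so ordinary Python control flow can branch or loop on tensor values with no separate graph-building or session step.

```python
import torch

# In eager mode each call runs immediately and returns a concrete tensor,
# so intermediate values can be inspected right away.
x = torch.tensor([3.0, -1.0, 2.0])
doubled = x * 2
print(doubled)  # already computed; no graph compilation step

# Data-dependent control flow: the number of loop iterations depends on a
# tensor's value, which works because that value already exists here.
steps = 0
total = doubled.sum()
while total > 1:
    total = total / 2
    steps += 1
print(steps, total.item())
```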

At this point, PyTorch is production ready, allowing you to transition easily between eager and graph modes with TorchScript, and to accelerate the path to production with TorchServe. The torch.distributed back end enables scalable distributed training and performance optimization in research and production, and a rich ecosystem of tools and libraries extends PyTorch and supports development in computer vision, natural language processing, and more. Finally, PyTorch is well supported on major cloud platforms, including Alibaba, Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure. Cloud support provides frictionless development and easy scaling.
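A minimal sketch of the eager-to-graph transition with TorchScript follows. The `Gate` module is a hypothetical toy, and an in-memory buffer stands in for the file you would normally hand to a production runtime such as TorchServe:

```python
import io

import torch
from torch import nn


class Gate(nn.Module):
    """Toy module with data-dependent control flow (illustrative only)."""

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        if x.sum() > 0:
            return x * 2
        return -x


eager_model = Gate()

# torch.jit.script compiles the module, including its if-statement,
# into a TorchScript program that can run without the Python interpreter.
scripted = torch.jit.script(eager_model)
print(scripted.code)  # the compiled TorchScript source

# Serialize and reload the scripted model.
buffer = io.BytesIO()
torch.jit.save(scripted, buffer)
buffer.seek(0)
loaded = torch.jit.load(buffer)

x = torch.tensor([1.0, -0.5])
print(eager_model(x), loaded(x))  # eager and scripted results should match
```

Scripting (rather than tracing) is used here because it preserves the `if` branch; a trace would record only the path taken by the example input.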

What’s new in PyTorch 1.10

According to the PyTorch blog, the PyTorch 1.10 updates focused on improving training and performance as well as developer usability. See the PyTorch 1.10 release notes for details. Here are a few highlights of this release:

- CUDA Graphs APIs are integrated to reduce CPU overheads for CUDA workloads.

- Several front-end APIs such as FX, torch.special, and nn.Module parametrization were moved from beta to stable. FX is a Pythonic platform for transforming PyTorch programs; torch.special implements special functions such as gamma and Bessel functions.

- A new LLVM-based JIT compiler supports automatic fusion on CPUs as well as GPUs. The LLVM-based JIT compiler can fuse together sequences of torch library calls to improve performance.

- Android NNAPI support is now available in beta. NNAPI (Android’s Neural Networks API) allows Android apps to run computationally intensive neural networks on the most powerful and efficient parts of the chips that power mobile phones, such as GPUs and specialized neural processing units (NPUs).
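The three front-end APIs that became stable can each be tried in a few lines. The sketch below is illustrative only: `AddRelu` and `Symmetric` are hypothetical modules, and the CUDA Graphs and NNAPI features are omitted because they need a GPU or an Android device.

```python
import torch
import torch.nn.utils.parametrize as parametrize
from torch import nn
from torch.fx import symbolic_trace

# torch.special: log-gamma and the modified Bessel function of order 0.
vals = torch.tensor([1.0, 5.0])
print(torch.special.gammaln(vals))            # log(Gamma(1)) = 0, log(Gamma(5)) = log(24)
print(torch.special.i0(torch.tensor([0.0])))  # I0(0) = 1


# FX: symbolically trace a module into a graph that Python code can inspect or rewrite.
class AddRelu(nn.Module):
    def forward(self, x):
        return torch.relu(x + 1)


traced = symbolic_trace(AddRelu())
print(traced.graph)  # placeholder, add, relu, and output nodes


# nn.Module parametrization: constrain a layer's weight to stay symmetric.
class Symmetric(nn.Module):
    def forward(self, w):
        return w.triu() + w.triu(1).transpose(0, 1)


layer = nn.Linear(3, 3)
parametrize.register_parametrization(layer, "weight", Symmetric())
print(torch.allclose(layer.weight, layer.weight.T))  # weight is now symmetric
```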

The PyTorch 1.10 release included about 3,400 commits, indicating a project that is active and focused on improving performance through a variety of methods.

How to get started with PyTorch

Reading the version update release notes won’t tell you much if you don’t understand the basics of the project or how to get started using it, so let’s fill that in.

The PyTorch tutorial page offers two tracks: one for those familiar with other deep learning frameworks and one for newbs. If you need the newb track, which introduces tensors, datasets, autograd, and other important concepts, I suggest that you follow it and use the Run in Microsoft Learn option, as shown in Figure 1.


Figure 1. The “newb” track for learning PyTorch.

If you’re already familiar with deep learning concepts, then I suggest running the quickstart notebook shown in Figure 2. You can also click on Run in Microsoft Learn or Run in Google Colab, or you can run the notebook locally.


Figure 2. The advanced (quickstart) track for learning PyTorch.
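For orientation, here is a condensed sketch of the kind of loop such a quickstart builds: a dataset and DataLoader, a model, a loss function, an optimizer, and a training loop. It is not the notebook’s code. A synthetic dataset replaces a real one, and the layer sizes, learning rate, and epoch count are arbitrary assumptions chosen to keep it small.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Synthetic stand-in for a real dataset: the label is 1 when the features sum > 0.
X = torch.randn(256, 4)
y = (X.sum(dim=1) > 0).long()
loader = DataLoader(TensorDataset(X, y), batch_size=32, shuffle=True)

model = nn.Sequential(nn.Linear(4, 16), nn.ReLU(), nn.Linear(16, 2))
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

for epoch in range(20):
    for batch_X, batch_y in loader:
        optimizer.zero_grad()                    # clear gradients from the previous step
        loss = loss_fn(model(batch_X), batch_y)
        loss.backward()                          # autograd computes parameter gradients
        optimizer.step()                         # SGD update of the parameters

# Evaluate without recording operations for autograd.
with torch.no_grad():
    accuracy = (model(X).argmax(dim=1) == y).float().mean().item()
print(f"training accuracy: {accuracy:.2f}")
```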

PyTorch projects to check out

As shown on the left side of the screenshot in Figure 2, PyTorch has lots of recipes and tutorials. It also has many models and examples of how to use them, usually as notebooks. Three projects in the PyTorch ecosystem strike me as particularly interesting: Captum, PyTorch Geometric (PyG), and skorch.

Captum

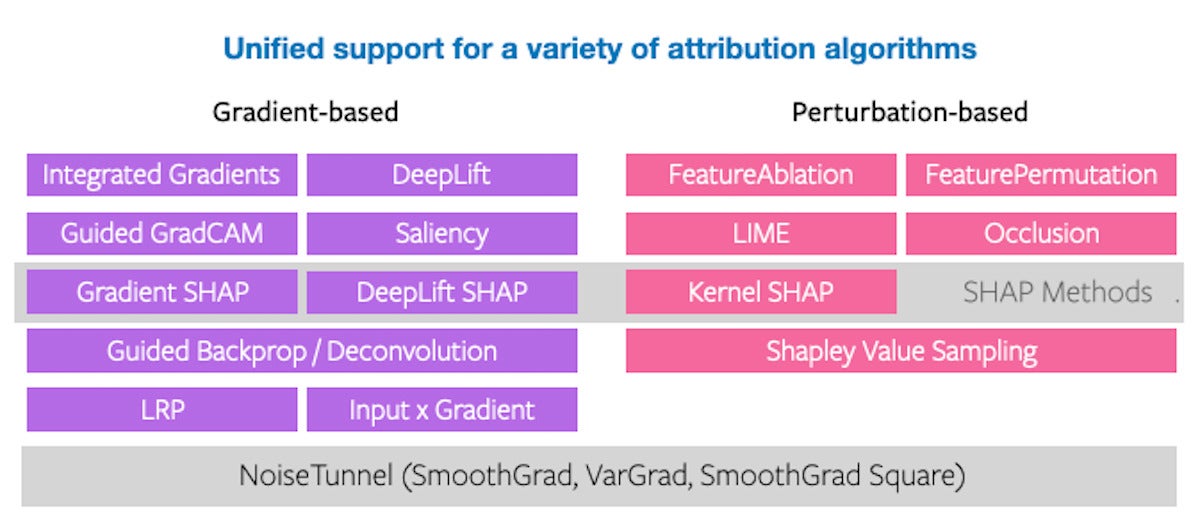

As noted on this project’s GitHub repository, the word captum means comprehension in Latin. As described on the repository page and elsewhere, Captum is “a model interpretability library for PyTorch.” It contains a variety of gradient- and perturbation-based attribution algorithms that can be used to interpret and understand PyTorch models. It also offers quick integration for models built with domain-specific libraries such as torchvision, torchtext, and others.

Figure 3 shows all of the attribution algorithms currently supported by Captum.


Figure 3. Captum attribution algorithms in a table format.

PyTorch Geometric (PyG)

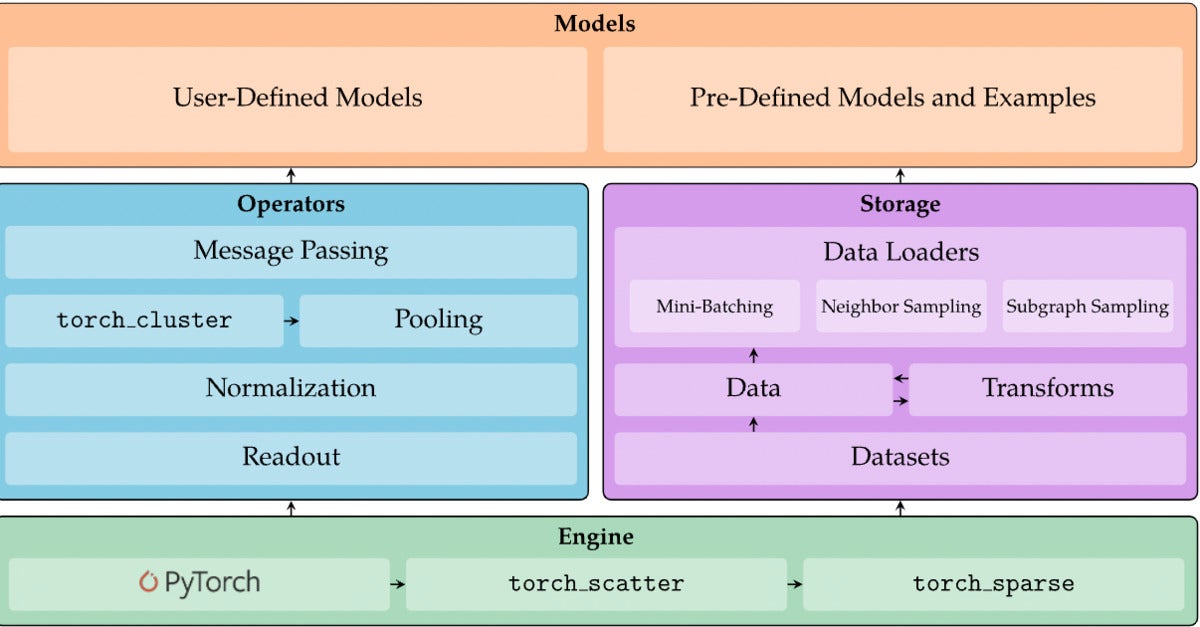

PyTorch Geometric (PyG) is a library that data scientists and others can use to write and train graph neural networks for applications related to structured data. As explained on its GitHub repository page:

PyG consists of methods for deep learning on graphs and other irregular structures, also known as geometric deep learning. In addition, it consists of easy-to-use mini-batch loaders for operating on many small and single giant graphs, multi GPU-support, distributed graph learning via Quiver, a large number of common benchmark datasets (based on simple interfaces to create your own), the GraphGym experiment manager, and helpful transforms, both for learning on arbitrary graphs as well as on 3D meshes or point clouds.

Figure 4 is an overview of PyTorch Geometric’s architecture.


Figure 4. The architecture of PyTorch Geometric.

skorch

skorch is a scikit-learn compatible neural network library that wraps PyTorch. The goal of skorch is to make it possible to use PyTorch with sklearn. If you are familiar with sklearn and PyTorch, you don’t have to learn any new concepts, and the syntax should be familiar. Additionally, skorch abstracts away the training loop, making a lot of boilerplate code obsolete. A simple net.fit(X, y) is enough, as shown in Figure 5.


Figure 5. Defining and training a neural net classifier with skorch.

Conclusion

Overall, PyTorch is one of a handful of top-tier frameworks for deep neural networks with GPU support. You can use it for model development and production, you can run it on-premises or in the cloud, and you can find many pre-built PyTorch models to use as a starting point for your own models.

Copyright © 2022 IDG Communications, Inc.
